aman ai primers | aman.ai • exploring the art of artificial intelligence

“GPTZero computes perplexity values. The perplexity is related to the log-probability …”
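The link between perplexity and log-probability can be made concrete. The sketch below uses the standard definition and assumes we already have the probability a language model assigned to each token of a text. How GPTZero obtains or uses these values is not described here. Perplexity is the exponential of the average negative log-probability, so text the model finds more predictable gets a lower perplexity.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity of a text given the model's probability for each of its tokens.

    Standard definition: PPL = exp(-(1/N) * sum_i log p(x_i | x_<i)).
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Toy per-token probabilities (illustrative, not from any real model).
# Probability 0.25 for every token is as uncertain as a uniform pick among 4 options.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
# A more confident model yields a lower perplexity.
print(perplexity([0.9, 0.8, 0.95]))
```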


Aman's AI Journal • Primers • AI. Overview: Here’s a hand-picked selection of articles on AI fundamentals and concepts that cover the entire process of building neural …

For example, if we have binary-class data that form a ring-like pattern (inner and outer …
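The ring example is the classic case where no straight line in the original (x, y) coordinates separates the two classes. The sketch below assumes points on two concentric circles. The radii 1 and 3 and the threshold 4 are illustrative choices, not taken from the original. Adding the nonlinear feature x² + y² turns the problem into one that a single linear threshold can solve.

```python
import math


def ring_data(n_per_class: int = 8):
    """Points on an inner circle (label 0, radius 1) and an outer circle (label 1, radius 3).

    Radii are illustrative assumptions.
    """
    points = []
    for label, radius in ((0, 1.0), (1, 3.0)):
        for i in range(n_per_class):
            angle = 2 * math.pi * i / n_per_class
            points.append((radius * math.cos(angle), radius * math.sin(angle), label))
    return points


def lift(x: float, y: float) -> float:
    """Nonlinear feature map: squared distance from the origin."""
    return x * x + y * y


def classify(x: float, y: float, threshold: float = 4.0) -> int:
    """A linear rule in the lifted feature: predict 1 if x^2 + y^2 > threshold."""
    return int(lift(x, y) > threshold)


data = ring_data()
accuracy = sum(classify(x, y) == label for x, y, label in data) / len(data)
print(f"accuracy with the x^2 + y^2 feature: {accuracy:.2f}")
```

The threshold is linear in the new feature. Mapped back to (x, y), it becomes a circle between the two rings.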

The runtime of the Transformer architecture is quadratic in the length of the input.

Primers • Transformers. Background: Representation Learning for NLP; Enter …
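The quadratic cost comes from self-attention, where each of the n query vectors is compared with each of the n key vectors. Below is a minimal pure-Python sketch of scaled dot-product attention scores. It uses toy inputs and skips the learned query/key projections that real Transformers apply first.

```python
import math
from typing import List

Matrix = List[List[float]]


def attention_scores(queries: Matrix, keys: Matrix) -> Matrix:
    """Scaled dot-product scores: one score for every (query, key) pair.

    For a sequence of n tokens this builds an n x n matrix, which is why
    self-attention time and memory grow quadratically with n.
    """
    d_k = len(keys[0])
    scale = 1.0 / math.sqrt(d_k)
    return [[scale * sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys] for q in queries]


def softmax_rows(scores: Matrix) -> Matrix:
    """Row-wise softmax, subtracting the row max for numerical stability."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def num_scores(n: int, d: int = 4) -> int:
    """Count the score entries for a toy (illustrative) sequence of length n."""
    x = [[float((i + j) % 3) for j in range(d)] for i in range(n)]
    return sum(len(row) for row in attention_scores(x, x))


for n in (8, 16, 32):
    print(n, num_scores(n))  # doubling n quadruples the number of scores
```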

Key Benefits. NTK-Aware Method Perspective. Summary. Related: Traditional DBs vs. Vector DBs. When not to use Vector DBs? Knowledge Graphs with LLMs: Best of Both …

Aman's AI Journal | Course notes and learning material for Artificial Intelligence and Deep Learning Stanford classes.

Primers • Math

GPT-1 was released in 2018 by OpenAI. It contained 117 million parameters. Trained on the BooksCorpus dataset of roughly 7,000 unpublished books, this generative language model was able to learn large …

Prompt engineering is a technique for directing an LLM’s responses toward specific outcomes without altering the model’s weights or parameters, relying solely on strategic …

This primer examines several tasks that can be effectively addressed using Natural Language Processing (NLP). Named Entity Recognition (NER): it focuses on identifying …
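NER output is commonly encoded as one tag per token. The sketch below assumes the BIO scheme: B- begins an entity, I- continues it, and O marks tokens outside any entity. The tags are hand-written for a hypothetical sentence rather than produced by a trained model. Decoding them recovers the entity spans.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO-tagged tokens into (entity_text, entity_type) pairs."""
    spans: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_type: Optional[str] = None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # A new entity starts; a stray I- tag with a different type also starts one.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [token], tag[2:]
        elif tag.startswith("I-"):
            current_tokens.append(token)  # continue the open entity
        else:  # "O": close any open entity
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
    if current_tokens:
        spans.append((" ".join(current_tokens), current_type))
    return spans


# Hypothetical sentence and hand-written tags (not real model output).
tokens = ["Alice", "joined", "Acme", "Corp", "in", "New", "York", "in", "2018"]
tags = ["B-PER", "O", "B-ORG", "I-ORG", "O", "B-LOC", "I-LOC", "O", "B-DATE"]
print(bio_to_spans(tokens, tags))
```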

Primers • PyTorch: Introduction; Getting Started; Creating a Virtual Environment; Using a GPU?; Recommended Code Structure; Running …

Primers • AI Fundamentals.


Dependency parsing is a critical task in NLP that involves analyzing the grammatical structure of a sentence. It identifies the dependencies between words, determining how each word relates to the others in the sentence. This analysis is pivotal to understanding the meaning and context of sentences in natural-language texts.

The purpose each file or directory serves: data/ will contain all the data of the project (generally not stored on GitHub), with an explicit train/dev/test split; experiments/ contains the different experiments (will be explained in the …

BERT (and all of its variants such as RoBERTa, DistilBERT, ALBERT, etc.) and XLM are examples of autoencoding (AE) models. The pros and cons of an AE model are as follows. Pros: context dependency: the autoregressive (AR) representation $h_\theta(\mathbf{x}_{1:t-1})$ is only conditioned on the tokens up to position $t$.

This primer presents a recipe for learning the fundamentals and staying up-to-date with GNNs. Graph Neural Networks (GNNs) are advanced neural network architectures designed to process graph-structured data, which are …

From the Wikipedia article on backpropagation: “Backpropagation, an abbreviation for ‘backward propagation of errors’, is a common method of training artificial neural networks used in conjunction with an optimization method such as gradient descent. The method calculates the gradient of a loss function with respect to all the weights in the network.”

In other words, ROC curves are appropriate when the observations are balanced across classes, whereas precision-recall curves are appropriate for imbalanced datasets. In both cases, the area under the curve (AUC) can be used as a summary of the model performance.
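The quantities behind both curves can be computed directly. The sketch below uses made-up scores and labels. It computes precision and recall at a single threshold, which is one of the points a precision-recall curve sweeps over. It also computes ROC AUC through its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting one half.

```python
from itertools import product
from typing import List, Tuple


def precision_recall(scores: List[float], labels: List[int], threshold: float) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN) at the given threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def roc_auc(scores: List[float], labels: List[int]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


# Made-up classifier scores and ground-truth labels (illustrative only).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(precision_recall(scores, labels, threshold=0.5))
print(roc_auc(scores, labels))
```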

Overview. This article investigates how the Vision Transformer (ViT) works by going over the minor modifications made to the Transformer architecture for image classification. We recommend checking out the primers on the Transformer and attention before exploring ViT. Transformers lack the inductive biases of Convolutional Neural Networks (CNNs …

Parameter-efficient fine-tuning is used particularly with large-scale pre-trained models (such as those in NLP) to adapt a pre-trained model to a new task without drastically increasing the number of parameters. The challenge is this: modern pre-trained models (like BERT, GPT, T5, etc.) contain hundreds of millions, if not billions …
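The parameter savings can be made concrete with LoRA (low-rank adaptation), one widely used parameter-efficient method. It is named here as an example and is not necessarily the method this primer goes on to cover. A frozen d × k weight matrix W is adapted as W + BA, and only B (d × r) and A (r × k) are trained. The layer size 768 and rank 8 are illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when updating the whole d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for the low-rank factors B (d x r) and A (r x k)."""
    return d * r + r * k


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain nested-list matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_merged_weight(w: Matrix, b: Matrix, a: Matrix, scale: float = 1.0) -> Matrix:
    """Effective weight W + scale * (B @ A); W itself stays frozen."""
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)] for w_row, d_row in zip(w, delta)]


# Illustrative sizes (assumed): a 768 x 768 projection adapted with rank 8.
d = k = 768
r = 8
print(full_finetune_params(d, k))  # 589824
print(lora_params(d, k, r))        # 12288
```

With these sizes, the low-rank factors hold about 2% of the parameters that a full update of that one matrix would train.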

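To summarize the different steps, here is a high-level overview of what needs to be done in train.py:

```python
# 1. Create the iterators over the training and evaluation datasets.
#    input_fn is defined elsewhere in the project; its first argument
#    distinguishes training (True) from evaluation (False) inputs.
train_inputs = input_fn(True, train_filenames, train_labels, params)
eval_inputs = input_fn(False, eval_filenames, eval_labels, params)
# 2. …
```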

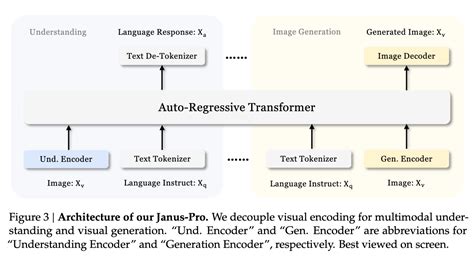

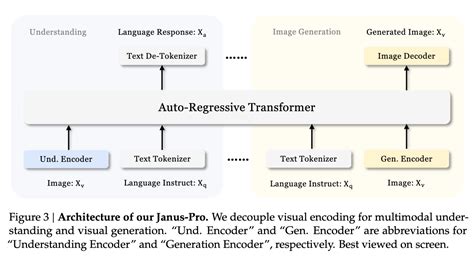

Overview. Vision-Language Models (VLMs) integrate both visual (image) and textual (language) information processing. They are designed to understand and generate content that involves both images and text, enabling them to perform tasks like image captioning, visual question answering, and text-to-image generation.

Related primers: Matplotlib and SciPy. Arrays. A NumPy array is a grid of values, all of the same type, and is indexed by a tuple of non-negative integers. The rank of an array is the number of dimensions it contains.

This paper by Balaguer et al. from Microsoft delves into two prevalent approaches for incorporating proprietary and domain-specific data into Large Language Models (LLMs): Retrieval-Augmented Generation (RAG) and fine-tuning. RAG augments prompts with external data, whereas fine-tuning embeds additional knowledge directly into the model.

The loss functions are divided into those used for classification tasks and those used for regression tasks. The following figure summarizes a few common loss functions and their use cases (image source: AiEdge.io). To get a quick …
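As a companion to the classification/regression split, the sketch below implements one common loss from each family. The choices are standard examples and not necessarily the losses shown in the referenced figure. Mean squared error is used for regression, and binary cross-entropy is used for classification.

```python
import math
from typing import List


def mse(y_true: List[float], y_pred: List[float]) -> float:
    """Mean squared error, a typical regression loss."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true: List[int], p_pred: List[float], eps: float = 1e-12) -> float:
    """Average negative log-likelihood of 0/1 labels under predicted probabilities.

    Probabilities are clipped to [eps, 1 - eps] so log() never receives 0.
    """
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


# Illustrative targets and predictions (made-up values).
print(mse([3.0, -0.5, 2.0], [2.5, 0.0, 2.0]))
print(binary_cross_entropy([1, 0], [0.9, 0.1]))
```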

A spectrogram is a time-frequency representation of the speech signal. It is a tool to study speech sounds (phones): phoneticians use spectrograms to visually study phones and their properties. Hidden Markov Models implicitly model spectrograms in speech-to-text systems.

Art and Design: Generative AI has been used to create original pieces of art and design. For example, GANs have been used to generate unique and realistic-looking images, from faces of non-existent people to artwork that has been auctioned for high prices.

Text Generation: In NLP, generative models have been used to create human-like text.
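A spectrogram is typically computed with a short-time Fourier transform. A window slides over the signal, and the magnitude of the discrete Fourier transform is taken for each frame. Below is a minimal pure-Python sketch. The frame length 32, hop 16, periodic Hann window, and synthetic tone are illustrative assumptions. Real pipelines use an FFT library and often a mel frequency scale.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal: List[float], frame_len: int = 32, hop: int = 16) -> List[List[float]]:
    """Magnitude STFT: one row per frame, one column per frequency bin 0..frame_len//2."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        # Naive O(N^2) DFT, keeping only the non-negative frequency bins.
        bins = []
        for k in range(frame_len // 2 + 1):
            x_k = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len) for n, x in enumerate(frame))
            bins.append(abs(x_k))
        frames.append(bins)
    return frames


# Synthetic tone (assumed): 4 cycles per 32-sample frame, so every frame should peak in bin 4.
tone = [math.cos(2 * math.pi * 4 * n / 32) for n in range(128)]
spec = spectrogram(tone)
print(len(spec), len(spec[0]))                # frames x frequency bins
print([row.index(max(row)) for row in spec])  # peak bin per frame
```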

Instead, you would now train a network to minimize the cost:

$$J_{\text{regularized}} = J_{\text{cross-entropy}} + \lambda J_{L1 \text{ or } L2}$$

where $\lambda$ is the regularization strength, which is a hyperparameter, and

$$J_{L1} = \sum_{\text{all weights } w_k} |w_k|$$

This paper by Riquelme et al. from Google Brain introduces the Vision Mixture of Experts (V-MoE), a novel approach for scaling vision models. The V-MoE is a sparsely activated version of the Vision Transformer (ViT) that demonstrates scalability and competitiveness with larger dense networks in image recognition tasks.

Primers • Xavier Initialization. The Importance of Effective Initialization. The Problem of Exploding or Vanishing Gradients. Case 1: a Too-large Initialization Leads to Exploding Gradients. Case 2: a Too-small Initialization Leads to Vanishing Gradients. Visualizing the Effects of Different Initializations.
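The regularized cost can be computed directly. The sketch below assumes a binary classifier whose cross-entropy term is averaged over examples. The L2 variant uses the standard sum of squared weights, which the text above does not spell out. The weights, λ, and predictions are made-up values.

```python
import math
from typing import List


def cross_entropy(y_true: List[int], p_pred: List[float]) -> float:
    """Average binary cross-entropy (the J_cross-entropy term)."""
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p) for t, p in zip(y_true, p_pred)) / len(y_true)


def l1_penalty(weights: List[float]) -> float:
    """J_L1: sum of the absolute values of all weights."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights: List[float]) -> float:
    """J_L2 (standard form, assumed here): sum of the squared weights."""
    return sum(w * w for w in weights)


def regularized_cost(y_true: List[int], p_pred: List[float], weights: List[float],
                     lam: float, kind: str = "l1") -> float:
    """J_regularized = J_cross-entropy + lambda * J_L1 (or J_L2)."""
    if kind not in ("l1", "l2"):
        raise ValueError("kind must be 'l1' or 'l2'")
    penalty = l1_penalty(weights) if kind == "l1" else l2_penalty(weights)
    return cross_entropy(y_true, p_pred) + lam * penalty


# Made-up weights, labels, predictions, and lambda (illustrative only).
weights = [0.5, -1.5, 2.0]
y_true, p_pred = [1, 0], [0.8, 0.3]
print(regularized_cost(y_true, p_pred, weights, lam=0.01, kind="l1"))
print(regularized_cost(y_true, p_pred, weights, lam=0.01, kind="l2"))
```

Increasing λ shifts the optimum toward smaller weights. L1 tends to drive some weights exactly to zero, while L2 shrinks all of them smoothly.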
